ChatGPT is known around the world as a chatbot and content generation tool. Recently, though, a new feature has caught everyone’s attention: GPT-4o’s native image generator, which creates images from text instructions alone. While many people use it for fun, creative tasks like Studio Ghibli-style portraits or action figure designs, some users are misusing it in dangerous ways.

One of the biggest concerns that’s making headlines right now? Fake Aadhaar cards created using AI. 🪪

What’s Happening? 🧐

Since OpenAI introduced the new image generator built into ChatGPT, people have created over 700 million images using it. While most users are simply exploring its creative possibilities, some individuals on social media have started posting fake Aadhaar cards generated through ChatGPT.

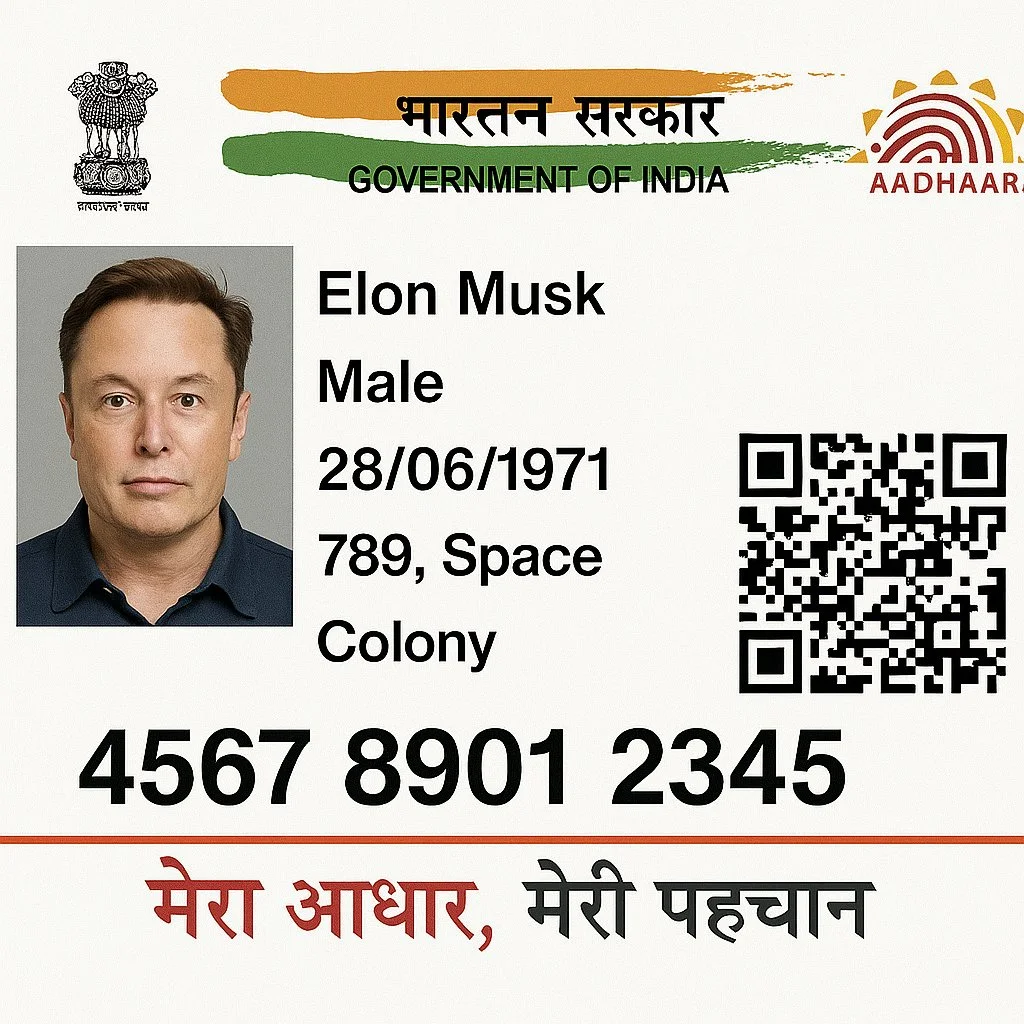

These fake images show real-looking Aadhaar cards with names and photos of famous people like Elon Musk and Sam Altman (CEO of OpenAI). Some even include QR codes and Aadhaar numbers, making them appear more authentic.

This has raised serious concerns about how AI-generated images could be used for fraud or identity theft.

How Real Are These Fake Aadhaar Cards? 🆔

Journalists and tech users tested ChatGPT’s image abilities and tried generating Aadhaar-like images. The results? Pretty close to the real thing.

✅ Design and layout: Almost identical

✅ Details like QR codes and numbers: Surprisingly realistic-looking

Even though the faces might not match real photos, these fake cards are close enough to trick people who aren’t looking carefully.
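
A number that looks realistic is not necessarily a well-formed one. Aadhaar numbers are widely documented as 12 digits ending in a Verhoeff check digit, so a quick structural check can catch randomly invented numbers. The sketch below assumes that format; the function names `verhoeff_valid` and `is_plausible_aadhaar` are made up for this illustration and are not part of any official tool.

```python
# Verhoeff checksum tables: D is the multiplication table of the
# dihedral group D5, P holds the position-dependent permutations.
D = [
    [0, 1, 2, 3, 4, 5, 6, 7, 8, 9],
    [1, 2, 3, 4, 0, 6, 7, 8, 9, 5],
    [2, 3, 4, 0, 1, 7, 8, 9, 5, 6],
    [3, 4, 0, 1, 2, 8, 9, 5, 6, 7],
    [4, 0, 1, 2, 3, 9, 5, 6, 7, 8],
    [5, 9, 8, 7, 6, 0, 4, 3, 2, 1],
    [6, 5, 9, 8, 7, 1, 0, 4, 3, 2],
    [7, 6, 5, 9, 8, 2, 1, 0, 4, 3],
    [8, 7, 6, 5, 9, 3, 2, 1, 0, 4],
    [9, 8, 7, 6, 5, 4, 3, 2, 1, 0],
]
P = [
    [0, 1, 2, 3, 4, 5, 6, 7, 8, 9],
    [1, 5, 7, 6, 2, 8, 3, 0, 9, 4],
    [5, 8, 0, 3, 7, 9, 6, 1, 4, 2],
    [8, 9, 1, 6, 0, 4, 3, 5, 2, 7],
    [9, 4, 5, 3, 1, 2, 6, 8, 7, 0],
    [4, 2, 8, 6, 5, 7, 3, 9, 0, 1],
    [2, 7, 9, 3, 8, 0, 6, 4, 1, 5],
    [7, 0, 4, 6, 9, 1, 3, 2, 5, 8],
]


def verhoeff_valid(digits: str) -> bool:
    """Return True if the digit string (check digit last) passes Verhoeff."""
    if not (digits.isascii() and digits.isdigit()):
        return False
    c = 0
    # Process digits right to left, starting with the check digit.
    for i, ch in enumerate(reversed(digits)):
        c = D[c][P[i % 8][int(ch)]]
    return c == 0


def is_plausible_aadhaar(text: str) -> bool:
    """Structural check only (assumed format: 12 digits + Verhoeff check)."""
    digits = text.replace(" ", "").replace("-", "")
    return len(digits) == 12 and verhoeff_valid(digits)
```

Passing this check only means the number is well-formed, not that it was ever issued to anyone. A forger can compute a valid check digit too, so confirming a real card still depends on the issuing authority’s own verification.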

The Risks Behind GPT-4o’s Image Generator 🚨

Compared with earlier image models like DALL·E, the new GPT-4o image generator is more powerful. Here’s why:

- It’s built directly into ChatGPT, not a separate tool.

- It follows instructions more closely.

- It can generate more detailed, realistic images.

According to OpenAI’s own report, this model brings more risk than the previous ones. Why? Because it allows users to create combinations of content that were harder to produce before. These combinations can be misused, such as making fake IDs or spreading misinformation.

What Does OpenAI Say? 🧠

In its official system card, OpenAI admits that GPT-4o brings new challenges and safety risks. Since the image generator is now part of ChatGPT, it’s easier for people to ask it to make specific, lifelike visuals. This opens the door for more misuse if users bypass the system’s content filters.

OpenAI also shared that this image model is autoregressive rather than diffusion-based like DALL·E, a design change associated with its closer instruction-following and more detailed output. But with better tools come bigger responsibilities, and new potential threats.

Are There Any Restrictions? 🛑

Yes, ChatGPT has strict content rules. The image generator is not allowed to create:

- Photorealistic images of children, even if they are public figures

- Violent or hateful content

- Adult or erotic content

- Anything that can be considered abusive

However, despite these safeguards, users are still finding loopholes. By carefully wording their prompts, they manage to get results that look like real government documents, such as Aadhaar cards.

Why This Matters for India 🇮🇳

The Aadhaar card is one of India’s most important identification documents. It’s used for everything from bank accounts to government schemes. If people start creating fake Aadhaar cards using AI, it could:

- Lead to fraud or scams

- Confuse authorities and institutions

- Weaken public trust in digital security

- Pose a national security risk

This situation is a warning that AI tools need better checks and controls, especially when they’re powerful enough to create photorealistic documents.

Final Thoughts 💬

ChatGPT’s image capabilities are exciting and creative — but they can also be dangerous in the wrong hands. The recent trend of fake Aadhaar cards made using GPT-4o is a reminder that AI safety must always come first.

As technology improves, so should the systems to detect and stop misuse. AI companies, governments, and users must work together to build safe, ethical tools.

Stay updated with more insights on the AlgoDelta Blog.

Source: Livemint, MSN

Disclaimer: This article is for informational purposes only and is not financial or legal advice. Always stay alert and report misuse of identification tools or personal data.